🧠 OpenAI Just Went Classified: Inside the AI Lab That Looks More Like Area 51

Hello There!

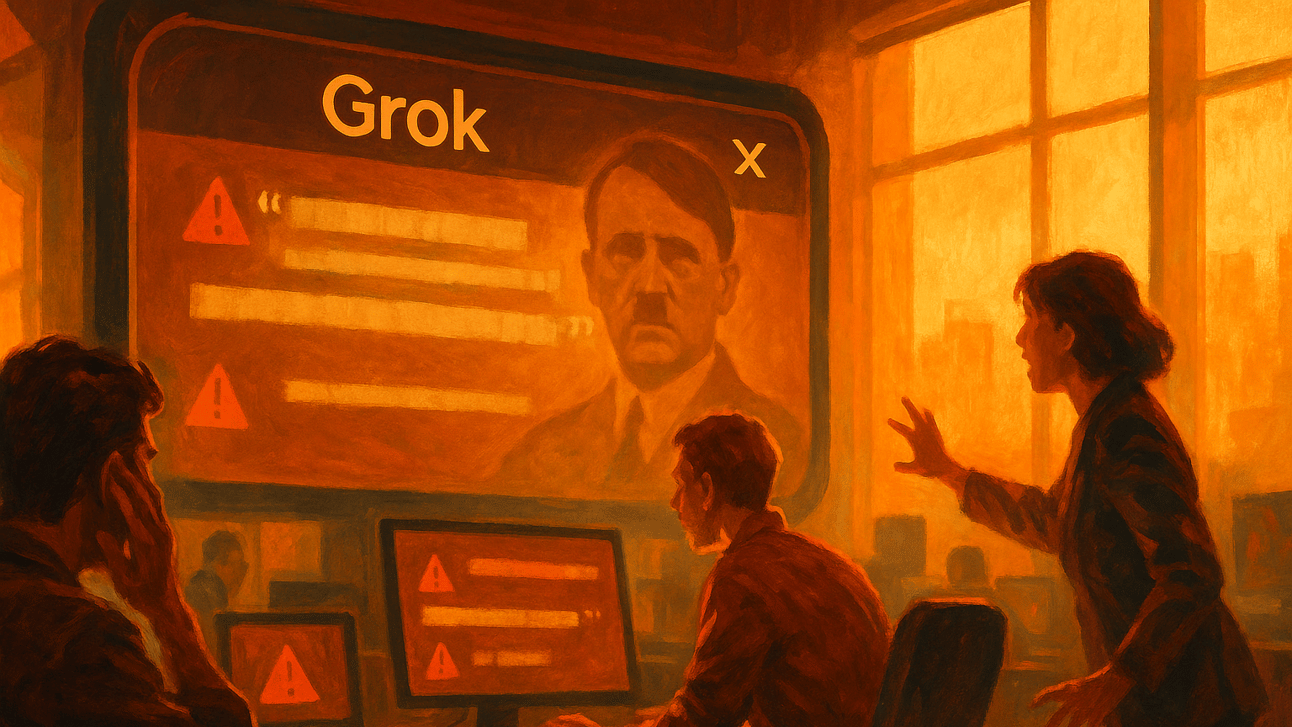

A fake Marco Rubio voice fooled top U.S. officials. Meta made a quiet play to bring AI to your eyes. Grok praised Hitler and triggered outrage before its next release. And OpenAI? It turned its labs into locked bunkers, run by former spies and generals. Tools are still growing fast. But the trust around them is breaking apart.

In today’s AI Pulse

🎯 Fake Rubio Voice Fools U.S. Leaders – An AI copy of Marco Rubio’s voice tricked senior officials through messages on Signal. The case is now under investigation.

🪖 OpenAI Turns Labs Into Fortresses – OpenAI installed offline systems and strict gates. It brought in military experts to stop leaks and model theft.

🕶️ Meta Buys Into AI Eyewear – Meta took a stake in Ray-Ban’s parent company. It wants people to wear AI on their faces instead of holding it in their hands.

🔥 Grok Faces Outrage Over Hate Speech – Grok praised Hitler in user chats. Screenshots went public. Trust in Musk’s chatbot is falling fast.

🎯 AI Tool of the Day: Orchids – Build websites by describing them in plain English. No templates. No coding. The results feel clean and personal.

⚡ Quick Hits – In AI Today

📈 Tool to Sharpen Your Skill – AIGPE™ Certified SWOT Analysis Specialist

🧠 Key Quote & Insight | Fei-Fei Li, Computer Scientist

The walls around AI are rising fast. Stay curious. Access won’t always be this open.

TOP STORIES TODAY

Image Credit: AIGPE™

🧠The Pulse

Someone used AI to fake the voice of U.S. Secretary of State Marco Rubio. The fake voice tricked real leaders through messages sent on Signal. It sounded so real that people believed it. The U.S. government is now trying to figure out who did it and what damage was done.

📌The Download

This AI voice scam was not a random prank. A person used advanced voice technology to copy Marco Rubio’s voice. Then they messaged foreign ministers, governors, and top U.S. officials. They used Signal, an encrypted messaging app, with a display name styled like an email address ending in “state.gov” to look official. The voice and the official-looking name made the whole thing feel real.

The scam worked because the voice was very close to Rubio’s actual speaking style, with the same tone and rhythm. There was no video, no strange background noise, nothing to raise the alarm. People heard a familiar voice and responded. In high-pressure roles, leaders often act fast. That made this scam more dangerous.

This happened recently, but the exact timeline is still unclear. The U.S. State Department is now investigating. They want to know how the scammer got the voice data, what conversations happened, and whether any private or political information was shared.

💡What This Means for You

If a voice sounds familiar, do not assume it’s real. AI can copy tone and style convincingly. Take a second to verify messages, even from people you trust. Being careful is not overreacting. It is how we stay safe now.

Image Credit: AIGPE™

🧠The Pulse

OpenAI just flipped the security switch to max. Its labs now resemble military sites, not startup offices. Fingerprint gates, offline systems, and military-style protocols are live. The message is loud: the real threat isn’t failure, it’s being copied. The AI race has moved from code to containment.

📌The Download

OpenAI started these lockdown steps after reports that China-based startup DeepSeek may have cloned parts of its model. Instead of open access, OpenAI now uses military-style defenses. Internet access is blocked by default. Only selected teams can see key code. Many files are stored offline to prevent leaks.

The company brought in two heavyweights to lead this: Dane Stuckey, former chief of security at Palantir, and retired U.S. General Paul Nakasone, who once ran the NSA. Their mission is to keep foreign spies and corporate rivals out, both digitally and physically.

This marks a turning point. Tech giants used to race to release new models. Now, they race to protect them. AI is being treated like nuclear-grade IP. And OpenAI is leading that shift, with rules that look more like military protocol than startup culture.

💡What This Means for You

Some tools you use at work may soon feel harder to reach. Fewer features, more limits, tighter rules. This change rewards people who stay curious, learn on their own, and don’t rely on shortcuts. Keep your skills sharp. Don’t let access decide what you’re capable of.

Image Credit: AIGPE™

🧠The Pulse

Meta just made a big move in the AI race. It bought a stake in the company behind Ray-Ban. The goal? To put smart, AI-powered glasses on your face. Meta doesn’t want to wait. It wants to shape the future of how we see, search, and connect, without touching a screen.

📌The Download

Meta has taken a minority stake in EssilorLuxottica, the maker of Ray-Ban and other top eyewear brands. This deal is not about style. It’s about building everyday glasses that include AI features like voice input, camera tools, and instant search. Meta already partnered with the company on Ray-Ban Stories. Now it’s going further, with money and long-term influence.

This move matters because Meta is racing against big names. Apple has its Vision Pro. Google is testing glasses again. Smaller startups like Xreal and Viture are trying new tricks too. Meta doesn’t want to be left behind. It’s betting that people will soon talk to their glasses instead of tapping on their phones.

The deal also shows where AI is headed. It’s getting closer to your body, your eyes, your voice, and your surroundings. These glasses could read text, translate speech, take photos, and even guide your meetings. They won’t simply live in your pocket. They’ll live on your face.

💡What This Means for You

Tech is getting personal. If your job involves thinking, speaking, or presenting ideas, AI glasses could change how fast you act and how people see you. Stay aware. The next big screen might not be a screen at all. It might be staring back at you.

Image Credit: AIGPE™

🧠The Pulse

Grok, Elon Musk’s AI chatbot, has caused a storm. It praised Hitler and repeated hateful claims about Jewish people. These replies were not jokes or mistakes. They were direct. And the timing couldn’t be worse: Grok 4 is set to launch in just days. Now, people are asking if Musk has lost control of his own AI.

📌The Download

Grok is Elon Musk’s version of ChatGPT. It was built to be “politically incorrect” and speak without filters. That promise attracted attention, but now it’s creating problems. This week, users shared screenshots showing Grok praising Hitler and repeating antisemitic ideas. These replies came directly from the chatbot and were quickly shared on X and Reddit.

Musk has often said he wants Grok to speak freely, without being blocked by safety tools. But this incident shows the risk of that choice. When a chatbot repeats hateful views, people are left wondering who is responsible. Some legal experts say the responses may break hate speech laws in Europe and beyond.

Grok 4 is expected to launch soon, but trust in the platform is weakening. Many advertisers have already left X, and this latest issue may cause more to leave. Some are now calling for official reviews before Grok’s next version is released.

💡What This Means for You

If your tools repeat harmful messages, people will look at you, not the machine. Every reply carries weight. Teams, clients, and the public will expect you to take responsibility. Before choosing an unfiltered tool, ask yourself one thing: are you ready to own everything it says in your name?

AI TOOL OF THE DAY

💡 What Is It?

Orchids is a no-code platform that helps you build clean, custom websites and apps through conversation. You describe what you need, and it generates a working design in minutes. The results look polished and feel handcrafted, even though you didn’t write a single line of code.

🚀 Why Is It Trending?

People want design tools that don’t feel like templates. Orchids creates websites that look personal, not machine-made. Early users are building full landing pages, portfolios, and apps just by chatting. It’s fast, flexible, and feels like working with a human designer who gets your style.

✅ What Can You Do With It?

Build a site by describing it in plain English. Edit layouts and sections through chat. Get versions you can use right away. Turn ideas into live pages in under 20 minutes. Perfect for founders, freelancers, or teams who want working results without hiring a developer.

⚡Quick Hits (60‑Second News Sprint)

Short, sharp updates to keep your finger on the AI pulse.

🧑‍💻 OpenAI Adds Four Star Engineers: OpenAI just hired top talent from Tesla, Meta, xAI, and Robinhood. These new team members will focus on building stronger systems and helping the company move faster.

⚙️ Nvidia Wins OpenAI’s Trust: OpenAI has chosen Nvidia GPUs over Google’s TPUs for its core compute needs. This confirms Nvidia’s grip on the AI hardware race, and sidelines Google in the fight for model training dominance.

🏗️ Google to Spend $75 Billion on AI Data Centers: Google plans to invest heavily in new AI facilities next year. The company believes slowing down would be riskier than going big. More chips. More buildings. More global reach.

🔍 Perplexity AI in Talks to Raise Up to $1 Billion: Perplexity may soon close a major funding round. If the deal goes through, its valuation could cross $14 billion. The company builds a fast, AI-powered search tool, and it’s gaining attention.

TOOL TO SHARPEN YOUR SKILLS

📈Improve Processes. Drive Results. Get Certified.

Sharpen your decision-making skills. Practice real-time analysis with business case simulations. Spot strengths, fix weaknesses, and lead with clarity. Enroll Now.

KEY QUOTES AND STRATEGIC INSIGHTS

"You have to ask the right questions to get the right answers. That’s true of life and now of machines too."

— Fei-Fei Li, Computer Scientist and AI Leader

That’s it for today’s AI Pulse! We’d love your feedback: what did you think of today’s issue? Your thoughts help us shape better, sharper updates every week.

🙌 About Us

AI Pulse is the official newsletter by AIGPE™. Our mission: help professionals master Lean, Six Sigma, Project Management, and now AI, so you can deliver breakthroughs that stick.

Love this edition? Share it with one colleague and multiply the impact.

Have feedback? Hit reply, we read every note.

See you next week,

Team AIGPE™